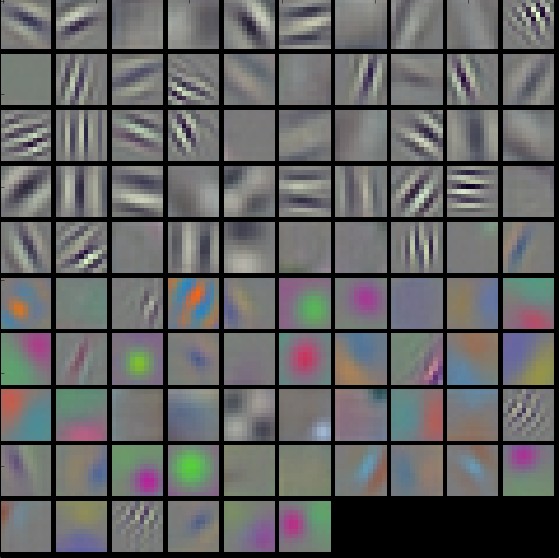

Pretrained filters of AlexNet¶

The first layer of the AlexNet model gives the following filters.

They are nice and smooth, which indicates the network has trained!

Note, that there are other visualization tools for the inner parameters of artificial neural networks!